Thick and densely structured biological tissues – like brain slices, tumors, or organoids – have long posed a challenge to optical microscopy. Conventional laser scanning methods often trade resolution for contrast, or vice versa, particularly when trying to image fine details buried beneath layers of background fluorescence.

Now, researchers at the Istituto Italiano di Tecnologia (IIT) in Genoa have developed a new image scanning microscopy (ISM) technique that bypasses this trade-off altogether. The method, described in Nature Photonics and shared as open-source software, uses structured detection to simultaneously achieve super-resolution and optical sectioning.

We spoke with Alessandro Zunino, first author of the study and postdoctoral researcher at IIT’s Molecular Microscopy and Spectroscopy Lab, to understand how this approach works, what makes it different, and where it could take fluorescence microscopy next.

What problem in microscopy were you trying to solve with this work?

One of the main activities of the lab is super-resolution fluorescence microscopy imaging, which typically uses high-numerical-aperture (NA) objective lenses. At high NA, the depth-of-field (DoF) of the microscope is shallow, implying that even relatively thin specimens, such as a single cell, can generate a significant defocused background. With even more complex samples, such as multi-cellular tissues, the out-of-focus light can be dominant, effectively covering the details of the specimen and jeopardizing any attempt to achieve super-resolution – i.e., spatial resolution beyond the diffraction limit.

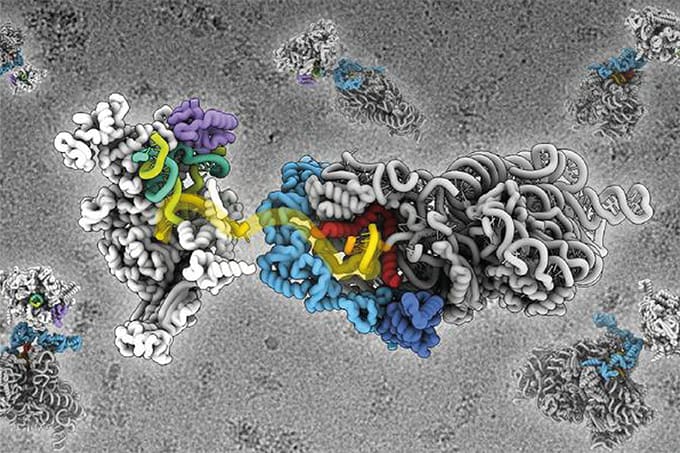

The conventional solution for laser scanning microscopes is to introduce a pinhole – namely, a micrometer-sized aperture – before the detector. The pinhole acts as a spatial filter that partially blocks out-of-focus fluorescence from reaching the detector. However, this strategy cannot completely block the out-of-focus background and inevitably deteriorates the signal-to-noise ratio (SNR) of the images.

Image scanning microscopy (ISM) is essentially an evolution of confocal microscopy, where the pinhole and the bucket detector are replaced by a pixelated detector – in our case, an asynchronous-readout single-photon avalanche diode (SPAD) array detector. Each sensitive element acts as a small pinhole, enhancing the resolution by up to a factor of two relative to the diffraction limit. At the same time, each element collects light from a different position of the detector plane, so that together they provide high SNR. This makes ISM a perfect tool to achieve super-resolution with a simple and effective optical architecture. However, conventional ISM reconstruction methods are not able to provide optical sectioning, limiting the effectiveness of ISM to thin samples. The previously adopted solution was to limit the size of the detector, exploiting the old pinhole strategy. Thus, even if ISM solves the long-standing trade-off between resolution and SNR typical of confocal microscopy, another trade-off remained unsolved: the one between optical sectioning and SNR. A new approach was needed.
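The statement that each detector element behaves like a small pinhole can be illustrated with a one-dimensional toy model. The sketch below assumes Gaussian excitation and detection profiles of equal width, which is a simplification and not the PSF model of the study; the function names, the grid, and the element displacement `shift` are hypothetical choices made for illustration only. It multiplies the excitation profile by a displaced detection profile and compares the width of the product with that of the excitation alone.

```python
import numpy as np

def gaussian(x, sigma, center=0.0):
    """Unnormalised 1D Gaussian profile (assumed PSF shape)."""
    return np.exp(-((x - center) ** 2) / (2.0 * sigma ** 2))

def fwhm(x, profile):
    """Full width at half maximum, estimated by counting samples above half the peak."""
    dx = x[1] - x[0]
    return dx * np.count_nonzero(profile >= profile.max() / 2.0)

def element_psf(x, sigma_exc, sigma_det, shift):
    """Effective PSF of one point-like detector element displaced by `shift`."""
    return gaussian(x, sigma_exc) * gaussian(x, sigma_det, center=shift)

x = np.linspace(-8.0, 8.0, 16001)   # position in arbitrary units
sigma = 1.0                         # assumed width of both profiles
shift = 1.0                         # assumed displacement of the element

h_exc = gaussian(x, sigma)
h_elem = element_psf(x, sigma, sigma, shift)

width_ratio = fwhm(x, h_elem) / fwhm(x, h_exc)
peak_position = x[np.argmax(h_elem)]

print(f"FWHM of element PSF relative to excitation PSF: {width_ratio:.3f}")
print(f"Peak of element PSF: {peak_position:.3f}")
```

Under these Gaussian assumptions the product is narrower than the excitation profile by a factor close to 1/√2, and its peak sits halfway between the optical axis and the displaced element. The full factor of two quoted above refers to the ideal limit and is not reproduced by this simple model.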

How does your method achieve both sharpness and depth without altering the physical setup?

The key element is the combination of structured illumination and structured detection. Indeed, we excite the sample point-by-point with a focused excitation beam and, for each scan coordinate, we collect the emitted fluorescence light with a detector array capable of measuring how light is distributed in space. With a full scan of the field-of-view, the ISM microscope returns a set of twenty-five images, one for each element of the array detector, each describing the same sample observed from a slightly different point of view. Thus, the raw images are similar but have different information content. The key observation that enabled our work was that the raw images differ in contrast, with the image generated by the central element being the brightest. The modulation is given by what we call the fingerprint function, which we can mathematically prove to be completely independent of the lateral structure of the specimen and to depend only on the point spread functions (PSFs) of the microscope. Since the fingerprint is a proxy of the PSF, it broadens when the emitters are out of focus. Therefore, we could design a reconstruction algorithm that exploits this information to unmix the out-of-focus contribution from the in-focus signal. That was a powerful observation: we do not need to rely on any specific feature of the specimen, so we can be general and provide true optical sectioning.
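The two properties of the fingerprint described here, independence from the sample and broadening with defocus, can be checked on simulated data. The following is a minimal sketch and not the authors' reconstruction code: it assumes Gaussian PSFs, a 5 × 5 detector, circular (FFT-based) image formation, and that defocus can be mimicked simply by widening the PSFs. All function names and parameter values are hypothetical. The fingerprint is computed as the total signal of each raw image, normalised over the detector elements.

```python
import numpy as np

def gaussian_2d(n, sigma, center=(0.0, 0.0)):
    """Unit-sum Gaussian on an n x n grid, offset from the grid centre."""
    ax = np.arange(n) - n // 2
    yy, xx = np.meshgrid(ax, ax, indexing="ij")
    g = np.exp(-((yy - center[0]) ** 2 + (xx - center[1]) ** 2) / (2.0 * sigma ** 2))
    return g / g.sum()

def element_psfs(n, sigma_exc, sigma_det, pitch, side=5):
    """One PSF per detector element: excitation PSF times shifted detection PSF."""
    h_exc = gaussian_2d(n, sigma_exc)
    offsets = (np.arange(side) - side // 2) * pitch
    psfs = [h_exc * gaussian_2d(n, sigma_det, (dy, dx))
            for dy in offsets for dx in offsets]
    return np.stack(psfs, axis=-1)          # shape (n, n, side * side)

def simulate_ism(sample, psfs):
    """Circular convolution of the sample with every element PSF."""
    f_sample = np.fft.fft2(sample)
    f_psfs = np.fft.fft2(np.fft.ifftshift(psfs, axes=(0, 1)), axes=(0, 1))
    return np.real(np.fft.ifft2(f_sample[..., None] * f_psfs, axes=(0, 1)))

def fingerprint(dataset, side=5):
    """Total signal of each raw image, normalised and arranged as the detector."""
    totals = dataset.sum(axis=(0, 1))
    return (totals / totals.sum()).reshape(side, side)

rng = np.random.default_rng(0)
n = 64
psfs_focus = element_psfs(n, sigma_exc=2.0, sigma_det=2.0, pitch=1.5)
psfs_defocus = element_psfs(n, sigma_exc=6.0, sigma_det=6.0, pitch=1.5)  # assumed defocus model

sample_a = rng.random((n, n))               # random texture
sample_b = np.zeros((n, n))
sample_b[10:20, 30:50] = 1.0                # a single rectangle

fp_a = fingerprint(simulate_ism(sample_a, psfs_focus))
fp_b = fingerprint(simulate_ism(sample_b, psfs_focus))
fp_out = fingerprint(simulate_ism(sample_a, psfs_defocus))

print("same fingerprint for both samples:", np.allclose(fp_a, fp_b))
print("central element, in focus vs defocused:", fp_a[2, 2], fp_out[2, 2])
```

In this toy model the two very different samples give the same fingerprint, the central element carries the largest share of the signal, and that share drops when the PSFs are widened, meaning the fingerprint spreads over the detector.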

How does your approach compare to other super-resolution and sectioning techniques?

Optical sectioning itself is not a novelty. Many imaging modalities enable it, such as selective plane illumination microscopy (SPIM), structured illumination microscopy (SIM), and 4Pi microscopy. Despite being very effective, they come with their own challenges and limitations. Indeed, these techniques can often be used only with a limited selection of specimens, or they require high technical complexity. Instead, the laser scanning architecture is very simple and is also very versatile. It can be easily combined with different advanced imaging techniques, such as fluorescence lifetime imaging (FLIM), multi-photon excitation (MPE), and stimulated emission depletion (STED). Thus, we believe the laser scanning architecture to be extremely useful and powerful, as demonstrated by the undisputed success of confocal microscopy in the past decades.

With the increasing pervasiveness of image processing, many researchers have proposed algorithms to remove out-of-focus background from a single image, regardless of the microscopy modality. These approaches, being entirely computational, are extremely simple and cheap. However, optical sectioning from a single 2D acquisition is forbidden by a physical phenomenon known as the missing cone. Therefore, this family of methods tries to remove the background by exploiting some prior information on the structure of the specimen. Typically, they assume that the out-of-focus background is large and homogeneous, while the in-focus part of the image is composed of sharp and small structures. In some specific cases, these assumptions are correct and can help enhance the contrast of the images. However, in many other cases, these assumptions do not hold (think about large cell bodies). Therefore, purely computational approaches are not general and cannot be considered true optical sectioning methods.
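The limitation of such structural priors can be made concrete with a toy example. The sketch below uses a generic high-pass filter (subtracting a Gaussian-blurred copy of the image) as a stand-in for this family of methods; it is not any specific published algorithm, and the image content, sizes, and filter width are hypothetical. The synthetic in-focus image contains one small spot and one large disk standing in for a cell body, on top of a uniform background.

```python
import numpy as np

def gaussian_blur(image, sigma):
    """Circular Gaussian blur applied in the Fourier domain."""
    fy = np.fft.fftfreq(image.shape[0])[:, None]
    fx = np.fft.fftfreq(image.shape[1])[None, :]
    transfer = np.exp(-2.0 * np.pi ** 2 * sigma ** 2 * (fy ** 2 + fx ** 2))
    return np.real(np.fft.ifft2(np.fft.fft2(image) * transfer))

def subtract_smooth_background(image, sigma):
    """Generic high-pass filter: anything smoother than `sigma` counts as background."""
    return image - gaussian_blur(image, sigma)

n = 128
yy, xx = np.mgrid[0:n, 0:n]

# In-focus content (hypothetical): a small bright spot and a large "cell body".
spot = np.exp(-((yy - 20) ** 2 + (xx - 20) ** 2) / (2.0 * 1.5 ** 2))
cell_body = ((yy - 64) ** 2 + (xx - 64) ** 2 <= 25 ** 2).astype(float)
in_focus = spot + cell_body

# Out-of-focus light modelled as a uniform offset (the prior these methods assume).
background = 0.5 * np.ones((n, n))
measured = in_focus + background

cleaned = subtract_smooth_background(measured, sigma=10.0)

# Fraction of the true in-focus signal that survives at two locations.
spot_retained = cleaned[20, 20] / in_focus[20, 20]
body_retained = cleaned[64, 64] / in_focus[64, 64]

print(f"small spot retained: {spot_retained:.2f}")
print(f"cell body centre retained: {body_retained:.2f}")
```

The small spot survives almost untouched, while most of the signal at the centre of the large in-focus structure is removed together with the background, because the filter cannot tell a large in-focus object from defocused light.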

Did anything unexpected emerge as you developed or tested the method?

The first step of the project was to develop the theory and the algorithm on paper. Then, we implemented the code and used it first on simulated and then on experimental data. When we obtained the first results, we were astonished by the resolution and optical-sectioning improvement our method was able to achieve. Then, we tested the same approach on conventional confocal images, and it failed. At that point, it was clear that the only reason we could unmix the in-focus from the out-of-focus component of the acquisition was the additional information provided by structured detection. That was the demonstration that ISM inherently enables optical sectioning without pinholes or other variations of the optical setup.

What motivated the decision to release this method as open science?

We firmly believe that science should always be open and fully reproducible. Furthermore, we designed our method not only for developers but also for microscopy users. Scientists who want to use our method for their own research can benefit from a user-friendly Python code without the need to re-implement the whole method from scratch. Furthermore, thanks to the commercialization of small and fast SPAD array detectors, ISM is becoming increasingly popular and rapidly replacing conventional confocal imaging. Many companies now provide either an ISM add-on or a full microscope. If users can access the raw data, they can also use the latest reconstruction methods, such as ours.

What applications do you see as the most promising in the near term?

Our method enables super-resolution and optical sectioning from 2D acquisitions, while boosting the SNR. These features make s2ISM especially suited for long-term live-cell imaging. Indeed, the dynamics of a specimen – such as the localization of sub-cellular structures or the phenotype of a cell – can be gently observed for a long time with the specificity provided by the sectioning effect we demonstrated.

What future directions are you most excited to pursue with this technology?

Image scanning microscopy is a powerful technology, and with our latest improvement it is now even more powerful. However, the temporal resolution – i.e., the frame rate – is still limited by the scan speed. Parallelization through multi-foci excitation is a promising path to increasing the imaging speed. With the recent commercialization of megapixel SPAD arrays, most of the current features of ISM will be retained. Furthermore, contrast mechanisms other than fluorescence are now emerging. Label-free non-linear effects – such as Raman and Brillouin scattering – are capable of providing high-level molecular specificity. Generalizing s2ISM beyond fluorescence is another interesting path to explore. Finally, reconstruction methods such as ours require knowledge of the PSF of the microscope. The latter can be measured experimentally, but noise and other practical aspects can compromise the fidelity of the measurement. As an alternative, it is possible to numerically simulate the PSF. However, optical aberrations might alter its shape, decreasing the fidelity once again. Thus, wavefront sensing or adaptive optics methods will be paramount to increasing the robustness of ISM when used to perform deep tissue imaging.